Readability Isn’t Enough: Why LLMs Like ChatGPT Fall Short in Regulated Communication

Read Time 7 mins | Written by: Josh Levithan

The Attraction of AI Simplicity

The promise is amazing: Large Language Models (LLMs) like ChatGPT can simplify financial language in seconds. For overworked teams, that is the perfect shortcut to compliance.

But in regulated environments, shortcuts come with risk. Simplifying text may improve readability, but without context, control, or clear intent, it does not improve intelligibility. And it’s intelligibility, the ability to understand, apply, and act on information, that regulation actually requires.

What the Law Actually Requires

The Consumer Duty, the Consumer Rights Act, and judgments by the Court of Justice of the European Union all emphasise the same thing: it’s not enough for language to look simple; it must be intelligible.

“A term must not only make grammatical sense, but also allow the consumer to evaluate, on the basis of clear, intelligible criteria, the economic consequences for them.”

— CJEU, Case C-26/13

Intelligibility is also a regulatory requirement in the Financial Conduct Authority (FCA) Handbook, here in the Consumer Credit sourcebook (CONC):

A firm must ensure that a communication or a financial promotion:

• uses plain and intelligible language

• is easily legible

— FCA Handbook CONC 3.3.2

That’s the standard. Intelligibility, not readability. Understanding, in context, by real people making decisions.

Why Readability Isn’t Enough

Readability scores measure surface features, like sentence length and word difficulty. But in regulated communication, that’s not what matters.

Clarity isn’t about simpler words. It’s about whether the reader understands the context, the consequences, and what to do next.

This is where LLMs like ChatGPT fall short. They optimise for fluency, not for meaning, intent, or risk. A sentence might read smoothly, but still:

- Remove critical conditions

- Soften or distort obligations

- Lose the legal and behavioural implications behind key terms

The FCA’s own LLM study confirmed this. ChatGPT was tasked with simplifying financial terms to a lower reading age, but the result wasn’t just simpler language. In many cases, key meaning was lost, context dropped out, and tone became overly literal or simplistic.

Just because a sentence uses simpler words doesn’t mean it’s easier to understand.

Readability formulas reward fluency and brevity, but they ignore nuance, context, and the reader’s ability to apply what they’ve read. That’s the blind spot LLMs inherit when readability is the target they are asked to hit.

Why? Because readability frameworks often only measure surface traits: word and sentence length. They say nothing about whether a person can understand the content, act on it, or grasp its legal and financial implications.
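To make that concrete, here is a minimal sketch of the Flesch-Kincaid grade level formula in Python. The syllable counter is a rough vowel-group heuristic, and both example sentences are invented for illustration; neither comes from the FCA study or from Amplifi. The only inputs the formula sees are counts of sentences, words, and syllables.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic (an assumption): one syllable per run of vowels."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level: uses only sentence, word, and syllable counts."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    syllables = sum(count_syllables(w) for w in words)
    return (
        0.39 * len(words) / len(sentences)
        + 11.8 * syllables / len(words)
        - 15.59
    )


# Hypothetical example: the rewrite drops the notice condition and the penalty.
original = (
    "You may withdraw your savings at any time, provided that you give "
    "30 days' notice; otherwise you will forfeit the interest earned in that period."
)
simplified = "You can take your money out at any time."

print(f"original:   grade {flesch_kincaid_grade(original):.1f}")
print(f"simplified: grade {flesch_kincaid_grade(simplified):.1f}")
```

The shorter version scores as far easier to read, yet it has removed the notice condition and the consequence of ignoring it. Nothing in the formula can detect that loss.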

Readability is not recognised in law. Intelligibility is.

It’s the benchmark in the Consumer Duty and the Consumer Rights Act, and it requires more than short sentences. It demands clarity in context, especially where rights, risks, and obligations are involved.

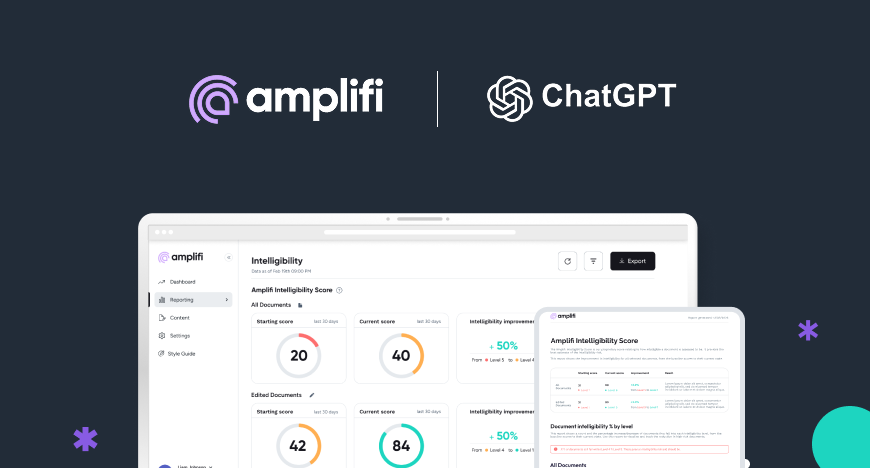

Built for Regulation: Amplifi’s Real-Time Intelligibility Engine

Amplifi vs ChatGPT: Built for Different Purposes

LLMs like ChatGPT are trained for general use, whereas Amplifi is designed for compliance environments.

| Feature / Capability | Amplifi | ChatGPT | Why It Matters |
| --- | --- | --- | --- |
| Regulatory Intelligibility Scoring | ✓ | ✗ | Amplifi scores against FCA, CMA, and Consumer Duty requirements. ChatGPT does not. |
| Risk Flagging (e.g. language, sentences, etc.) | ✓ | ✗ | Amplifi highlights language that could cause consumer harm or fail audits. |
| Guided Simplification | ✓ | ✗ | Amplifi offers legally sound, compliance-focused improvements. |
| Audit-Ready Reporting | ✓ | ✗ | Amplifi produces version history, evidence trails, and board-ready reports. |
| Governance & Team Sign-off | ✓ | ✗ | Amplifi supports workflows across legal, comms, and compliance teams. |

Why We’re Speaking on This

Intelligibility isn’t just a concept we talk about. It’s something we’ve tested, measured, and validated, with regulators, academics, and thousands of real documents.

- We built the first intelligibility model designed for regulation

Amplifi combines its proprietary research in language, comprehension, design, navigation, accessibility, and engagement with more than 50 years of readability studies, including Flesch-Kincaid, Gunning-Fog, and psycholinguistic models.

We’ve trained and refined our model using real-world financial disclosures, legal notices, and high-stakes documents. By combining behavioural testing, NLP, and machine learning, we can pinpoint where communication breaks down and what fixes it.

- We’ve tested this in partnership with regulators

We’ve worked on multiple regulator-aligned studies, including a credit disclosure project in the FCA Sandbox and a legal guidance simplification project with the Solicitors Regulation Authority (SRA).

At the SRA, we partnered with Professor Richard Hyde (Law, Regulation and Governance - Nottingham University) to simplify regulatory guidance using Amplifi. The simplified versions were then tested with a diverse group of readers, using structured comprehension tasks and the Amplifi Multi-Level Comprehension Framework.

What we found:

- A measurable improvement in comprehension

- More consistent understanding across diverse reader groups

- Evidence of systematic reading, where readers moved through the text more efficiently, skipped less, and required less rereading

This wasn’t just about retention or memory, but about how readers processed, understood, and applied the information in context.

- We’ve shown Amplifi can predict understanding

In both FCA and SRA-aligned studies, Amplifi’s Multi-Level Comprehension Framework was used to test how readers understood, interpreted, and acted on real-world documents. The results closely matched Amplifi’s intelligibility scores, demonstrating that our model doesn’t just assess text for style or tone, but accurately predicts true comprehension.

What’s Lost Without Intelligibility

LLMs can support drafting. But simplification alone isn’t the goal. In regulated communication, it’s not enough.

When intelligibility isn’t measured, tested, or designed for, what’s lost is more than just clarity. You lose:

- Confidence that the message will be understood

- Control over how it’s interpreted or applied

- Accountability for outcomes that follow

In complex, high-stakes environments, words must do more than sound right. They must work in context, support decisions, and withstand scrutiny.

That’s the difference between surface simplicity and true clarity.

And that’s why Amplifi was built.